- Getting the Skeleton Files

- Introduction

- Puzzle Formalization

- Best-First Search (conceptual)

- Best-First Search (implementation)

- FAQ

- Why am I getting cannot resolve symbol ‘BoardUtils’?

- The autograder says I’m using too many moves on a puzzle but when I try it locally it works fine.

- The neighbors() method provided doesn’t work. It looks like it only returns the initial board.

- How do I know if my Solver is optimal?

- My program is too slow or runs out of memory on some of the large sample puzzles. Is this OK?

- What size puzzles are we expected to solve?

- Even with the critical optimization, the priority queue may contain two or more search nodes corresponding to the same WorldState. Should I try to eliminate these with something like a HashSet of previously used states?

- The puzzles work fine on my computer, but not on the AG. I’m getting a GC overhead limit exceeded error, or just a message that the “The autograder failed to execute correctly.”

- How do I ensure my Board class immutable?

- Why can’t Gradescope compile my files even though I can compile them locally?

- The AG is reporting a bug involving access$ or some kind of null pointer exception. What’s going on?

- Credits

Getting the Skeleton Files

As usual, run git pull skeleton master to get the skeleton files.

Introduction

In this assignment, we’ll be building an artificial intelligence that solves

puzzles. Specifically, given an object of type WorldState, your solver will

take that WorldState and find a sequence of valid transitions between world

states such that the puzzle is solved.

As an example of one such puzzle, consider the “word ladder” puzzle. In this puzzle, we try to convert one word in English to another by either changing, adding, or removing letters such that every transition results in a valid English word. Suppose we start with the word “horse” and we want to turn it into “nurse”. To do this, we could perform the following transitions: horse -> hose -> hole -> cole -> core -> cure -> pure -> purse -> nurse.

As another example, consider the 8-puzzle problem. The 8-puzzle is a puzzle invented and popularized by Noyes Palmer Chapman in the 1870s. It is played on a 3-by-3 grid with 8 square tiles labeled 1 through 8 and a blank square. In this puzzle, the goal is to rearrange the tiles so that they are in order, using as few moves as possible. The player is permitted to slide tiles horizontally or vertically into the blank square. The following shows a sequence of legal moves from an initial board (left) to the goal board (right). Each of these puzzles is considered a valid “WorldState”.

1 3 1 3 1 2 3 1 2 3 1 2 3

4 2 5 => 4 2 5 => 4 5 => 4 5 => 4 5 6

7 8 6 7 8 6 7 8 6 7 8 6 7 8

initial 1 left 2 up 5 left goal

In this assignment, not only must your artificial intelligence find a solution to any WorldState puzzle, but it must also be the shortest possible solution. While this may seem daunting, read on, and you’ll see that we can solve this problem with a clever use of a priority queue.

Puzzle Formalization

In this assignment, all puzzles will be implementations of the WorldState

interface, given below.

package hw4.puzzle;

public interface WorldState {

/** Provides an estimate of the number of moves to reach the goal.

* Must be less than or equal to the correct distance. */

int estimatedDistanceToGoal();

/** Provides an iterable of all the neighbors of this WorldState. */

Iterable<WorldState> neighbors();

/** Estimates the distance to the goal. Must be less than or equal

* to the actual (and unknown) distance. */

default public boolean isGoal() {

return estimatedDistanceToGoal() == 0;

}

}

As seen above, every WorldState object must be able to return all of its

neighbors, and it must have a method to return the estimated distance to the goal. For reasons that will become clear later, this estimate must be less than or equal to the true distance.

As an example, suppose we are solving a word ladder puzzle. Suppose that the current WorldState is the starting word “horse”, and our goal is to get to the word “nurse”. In this case, neighbors() would return ["house", "worse", "hose", "horses"], and estimatedDistanceToGoal might return 2, since changing ‘h’ into ‘n’, and ‘o’

into ‘u’ would result in reaching the goal.

Ultimately, the WorldState class is an implicit recursive data structure known as a graph, which we will traverse from our start state to our goal state. The neighbors() method tells us where we could possibly go, and the estimatedDistanceToGoal() will be used by our algorithm to decide where to explore first.

Test your understanding: Why is 2 guaranteed to be less than or equal to the true distance from “horse” to the goal “nurse”?

Best-First Search (conceptual)

Our goal is to traverse the recursive data structure formed by our WorldState objects and their neighbors(). A naive algorithm would simply recursively search all neighbors in no particular order until you found the goal.

However, this algorithm would be too slow. For example, suppose our current state is “mug”, which has neighbors [“bug”, “mag”, “mud”, “rug”]. Suppose our goal is “rugs”. Naively recursive calling our search function on all the neighbors of “mug” would take us to “bug”, which provides no progress towards our goal. Instead, “rug” is clearly the better choice.

Instead, we will do something called ‘best first search’, where we’ll try to make intelligent moves. For example, if we’re trying to get from ‘mug’ to the goal state ‘rugs’, the best neighbor of ‘mug’ to explore is ‘rug’.

Conceptually, the idea is pretty simple:

- Keep a priority queue of “move sequences”.

- Remove the “best” move sequence from the PQ, let’s call it BMS.

- Let F be the last state in BMS.

- If F is the goal state, you’re done, so return BMS.

- If F is not the goal, then for each neighbor N of F, create a new move sequence that consists of BMS + N and put it in the PQ.

This algorithm is also known as the A* search algorithm. We’ll talk about it in great detail later in class.

Best-First Search (implementation)

Your AI will implement the A* search algorithm.

Before we describe this algorithm, we’ll first define a search node of the puzzle to be:

- a

WorldState. - the number of moves made to reach this world state from the initial state.

- a reference to the previous search node.

Each SearchNode represents one “move sequence” as defined in the conceptual description of Best-First Search.

The first step of the algorithm is to create a priority queue of search nodes, and insert an “initial search node” into the priority queue, which consists of:

- the initial world state.

- 0 (since no moves have been made yet).

- null (since there is no previous search node).

The algorithm then proceeds as follows:

- Remove the search node with minimum priority. Let’s call this node X. If it is the goal node, then we’re done.

- Otherwise, for each neighbor of X’s world state, create a new search node that obeys the description above and insert it into the priority queue.

We can think of each search node as having a priority equal to the sum of (the

number of moves made to reach this world state from the initial state + the

WorldState’s estimatedDistanceToGoal). In other words, if we have two search

nodes on the priority queue, with the first M1 moves away from the initial state

and E1 as its estimated distance to goal, and the other having M2 moves so far

and E2 as its estimated distance to goal, then the former search node will have

a lower priority iff (M1 + E1) < (E2 + M2).

The A* algorithm can also be thought of as “Given a state, pick a neighbor state such that (distance so far + estimated distance to goal) is minimized. Repeat until the goal is seen”.

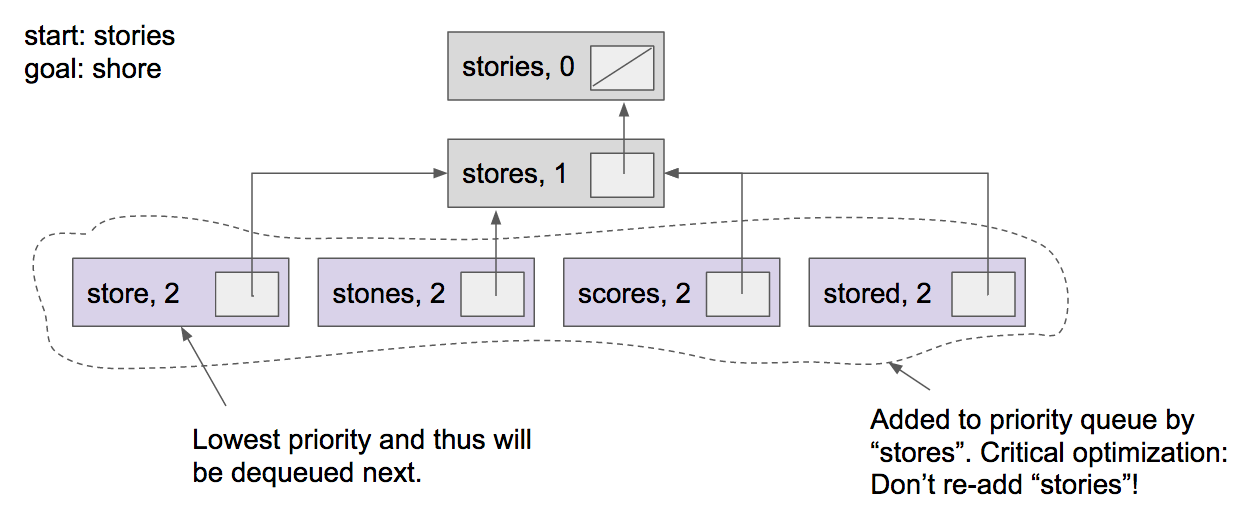

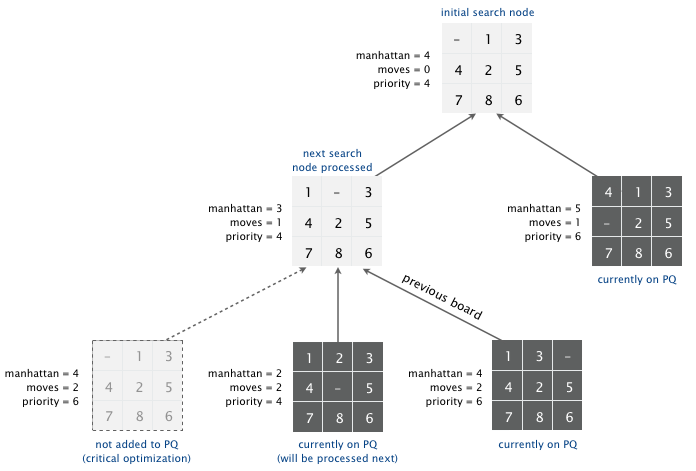

As an example, consider the problem of converting the word “stories” into “shore”. The diagram below shows the six search-nodes created after two removals, i.e. after “stories” has had a chance to be X, and “stores” has had a chance to be X. At this point in time, the priority queue contains four search nodes, and the next node to be dequeued will be “store”, for which M = 2 and E = 1. The critical optimization mentioned in the figure is described below under “Optimizations”.

To see an example of this algorithm in action, see this video or these slides.

Solver

Create an immutable Solver class with the following API:

public class Solver {

public Solver(WorldState initial)

public int moves()

public Iterable<WorldState> solution()

}

Where the methods work as follows:

Solver(initial): Constructor which solves the puzzle, computing

everything necessary for moves() and solution() to

not have to solve the problem again. Solves the

puzzle using the A* algorithm. Assumes a solution exists.

moves(): Returns the minimum number of moves to solve the puzzle starting

at the initial WorldState.

solution(): Returns a sequence of WorldStates from the initial WorldState

to the solution.

To implement the A* algorithm, you must use the MinPQ class from edu.princeton.cs.algs4 for the priority queue.

Test out your code using the WordPuzzleSolver class. If implemented

properly, you should get a path between “cube” and “tubes”. Try changing the

start state to “horse” and the end state to “nurse”, and you should get the

solution horse -> hose -> hole -> cole -> core -> cure -> pure -> purse ->

nurse. Other solutions of the same length are technically correct, but for full

credit on this assignment, you’ll need to implement the critical optimization

described below. If you get “horse -> hose -> home -> come -> core -> cure ->

pure -> purse -> nurse”, most likely you have not implemented the critical

optimization properly.

Hint: Recall the search node concept mentioned above for using your PQ.

Optimizations

A critical optimization: Best-first search has one annoying feature: search nodes corresponding to the same board are enqueued on the priority queue many times. To reduce unnecessary exploration of useless search nodes, when considering the neighbors of a search node, don’t enqueue a neighbor if its world state is the same as the world state of the previous search node. You must implement this optimization, otherwise your code will not be fast enough for the second part of this assignment or the Gradescope autograder.

You can test that your critical optimization is working correctly by running

CommonBugDetector (added to skeleton on 3/28/18) and following the directions

given in the print statement that starts with “TODO”.

Edit (4/1/2018): The critical optimization only checks that no enqueued WorldState is its own grandparent! Some students have tried something fancier like never allowing any WorldState to be enqueued twice. This doesn’t work. See the FAQ for more.

A second optimization: To avoid recomputing the estimatedDistanceToGoal()

result from scratch each time during various priority queue operations, compute

it at most once per object; save its value in an instance variable; and return

the saved value as needed. This caching technique is broadly applicable:

consider using it in any situation where you are recomputing the same quantity

many times and for which computing that quantity is a bottleneck operation.

Board

For the second part of this assignment, you’ll implement the Board class, allowing your Solver from part 1 to solve the 8-puzzle.

Organize your program by creating an immutable Board class with the following API:

public class Board implements WorldState {

public Board(int[][] tiles)

public int tileAt(int i, int j)

public int size()

public Iterable<WorldState> neighbors()

public int hamming()

public int manhattan()

public int estimatedDistanceToGoal()

public boolean equals(Object y)

public String toString()

}

Where the constructor and methods work as follows:

Board(tiles): Constructs a board from an N-by-N array of tiles where

tiles[i][j] = tile at row i, column j

tileAt(i, j): Returns value of tile at row i, column j (or 0 if blank)

size(): Returns the board size N

neighbors(): Returns the neighbors of the current board

hamming(): Hamming estimate described below

manhattan(): Manhattan estimate described below

estimatedDistanceToGoal(): Estimated distance to goal. This method should

simply return the results of manhattan() when submitted to

Gradescope.

equals(y): Returns true if this board's tile values are the same

position as y's

toString(): Returns the string representation of the board. This

method is provided in the skeleton

Note that the class will also support an isGoal() method that returns true

if the board is the goal board. This is implemented for you already in the

interface, so you should not reimplement it in your Board class.

The neighbors method is arguably a bit tedious. If you want, you’re welcome to use our solution. If you do this, make sure to cite your source (like you should every time you get significant help from anyone else online or in person).

Corner cases: You may assume that the constructor receives an N-by-N array

containing the N2 integers between 0 and N2 − 1, where 0

represents the blank square. The tileAt() method should throw a

java.lang.IndexOutOfBoundsException unless both i and j are between 0 and N −

1.

Performance requirements: Your implementation should support all Board methods in time proportional to N2 (or faster) in the worst case.

Goal Distance Estimates

We consider two goal distance estimates:

- Hamming estimate: The number of tiles in the wrong position.

- Manhattan estimate: The sum of the Manhattan distances (sum of the vertical and horizontal distance) from the tiles to their goal positions.

For example, the Hamming and Manhattan estimates of the board below are 5 and 10, respectively.

8 1 3 1 2 3 1 2 3 4 5 6 7 8 1 2 3 4 5 6 7 8

4 2 4 5 6 ---------------------- ----------------------

7 6 5 7 8 1 1 0 0 1 1 0 1 1 2 0 0 2 2 0 3

initial goal Hamming = 5 + 0 Manhattan = 10 + 0

The Manhattan estimate will always be greater than or equal to the Hamming estimate. Both estimates will always be less or than equal to the true distance (try to convince yourself why).

A Deeper Look at A* (optional)

As an aside, we can make a key observation which gives insight into why A* works: To solve the puzzle from a given search node on the priority queue, the total number of moves we need to make (including those already made) is at least its priority, using either the Hamming or Manhattan estimate. (For the Hamming estimate, this is true because each tile that is out of place must move at least once to reach its goal position. For the Manhattan estimate, this is true because each tile must move its Manhattan distance from its goal position. Note that we do not count the blank square when computing the Hamming or Manhattan estimates.) Consequently, when the goal board is dequeued, we have discovered not only a sequence of moves from the initial board to the goal board, but one that makes the fewest number of moves. (Challenge for the mathematically inclined: prove this fact.)

One way to view the computation is as a game tree, where each search node is a node in the game tree and the children of a node correspond to its neighboring search nodes. The root of the game tree is the initial search node; the internal nodes have already been processed; the leaf nodes are maintained in a priority queue; at each step, the A* algorithm removes the node with the smallest priority from the priority queue and processes it (by adding its children to both the game tree and the priority queue).

Solver Test Client

We’ve provided two puzzle solvers that use your Solver class. These are

EightPuzzleSolver.java and WordPuzzleSolver.java. Do not modify these

files. 8-Puzzle input files are provided in the input folder.

The input and output format for a board is the board size N followed by the N-by-N initial board, using 0 to represent the blank square. An example of an input file for N = 3 would look something like this:

3

0 1 3

4 2 5

7 8 6

Your program should work correctly for arbitrary N-by-N boards (for any 1 < N < 32768), even if it is too slow to solve some of them in a reasonable amount of time. Note N > 1.

To test against input in IntelliJ, set the command line arguments up using the Edit Configurations

option (in the spirit of building independence see

Google).

As an example, if I tested against an input file input/test01.in with the

following contents:

2

1 2

0 3

I should get the following output:

Minimum number of moves = 1

2

1 2

0 3

2

1 2

3 0

EDIT: 3/30/2018: I’ve added a more exhaustive set of local tests in the file TestSolver.java. This tester automatically tries a bunch of puzzles. If you need to understand why a particular test fails, please use the debugger, possibly along with one of the two solver test clients described above.

Optional

Try modifying the Word class so that neighbors is constant time instead of

linear time. Try out the solver with a large words file, for example the one you

used for project 1A. Report any cool findings on Piazza.

Optional Ultra-Edition

Try implementing other WorldState puzzles and show that your Solver is capable

of solving them. Examples include maze traversal, path finding for video game

AI, and solving a Rubik’s Cube.

FAQ

Why am I getting cannot resolve symbol ‘BoardUtils’?

File -> Project Structure -> Libraries -> (+) sign to add new Java Library -> Select your login/hw4 directory DO NOT USE login/hw4/hw4/puzzle -> OK -> OK -> OK.

These are the steps needed for Macs. I suspect there won’t be big differences for other operating systems. –>

The autograder says I’m using too many moves on a puzzle but when I try it locally it works fine.

Chances are that the autograder is breaking ties in a different order and the bug

in your code only very rarely occurs. Try running CommonBugDetector. The most

common reason this happens is that you are trying to avoid revisiting old

WorldStates but you are doing so in a manner that breaks our search algorithm.

The neighbors() method provided doesn’t work. It looks like it only returns the initial board.

It works, but it does depend on the board being immutable. See the provided

TestBoard.java to verify that your Board class is immutable.

How do I know if my Solver is optimal?

The shortest solution to puzzle4x4-hard1.txt and puzzle4x4-hard2.txt are 38 and 47, respectively. The shortest solution to “puzzle*[T].txt” requires exactly T moves. Warning: puzzle36.txt, puzzle47.txt, and puzzle49.txt, and puzzle50.txt are relatively difficult.

My program is too slow or runs out of memory on some of the large sample puzzles. Is this OK?

You should not expect to solve many of the larger puzzles with the Hamming priority function. However, you should be able to solve most (but not all) of the larger puzzles with the Manhattan priority function. Which puzzles your program is capable of solving may vary widely based on your code and computer.

What size puzzles are we expected to solve?

Here are the puzzles you are explicitly expected to solve:

input/puzzle2x2-[00-06].txt

input/puzzle3x3-[00-30].txt

input/puzzle4x4-[00-30].txt

input/puzzle[00-31].txt

Edit (3/30/2018): The provided TestSolver.java file will test all of these puzzles.

Even with the critical optimization, the priority queue may contain two or more search nodes corresponding to the same WorldState. Should I try to eliminate these with something like a HashSet of previously used states?

In principle, you can avoid revisiting WorldStates by somehow “marking” them after they’ve been used. For example, you can create a HashSet<WorldState> to record all marked WorldStates. It is possible to do this, but be warned: this is beyond the scope for CS61B, so it’s going to feel very hand-wavy.

The key issue is that you shouldn’t consider a state to be “used” until it is dequeued.

In other words, if you DO attempt to do this, you should only “mark” a WorldState when it is dequeued from the PQ, not when it is enqueued! The reason for this is beyond the scope of 61B (see CS188 for more!), but the rough intuition behind this is as follows: If you’re at a move sequence (a.k.a. SearchNode) that ends at WorldState X and you see that WorldState G is one of X’s neighbors, it’s not safe to assume that this is the best path, and therefore it’s not safe to enqueue X->G and then subsequently disallow all other paths that end in G.

As a crude analogy, imagine trying to compute driving directions to the Eiffel Tower by looking at a bunch of pictures taken from various locations: Just because you can see the Eiffel Tower from a picture of location X doesn’t mean that location X is along the best route to your destination, so you can’t just give up the first time you see the Eiffel Tower in a picture.

This approach is not required! The critical optimization described in the spec is enough. However, you’re welcome to try this out and see if it helps. One potential pitfall is that your code may use too much memory, which may make the autograder crash in weird ways.

Note: If you take 188, you’ll learn that this version of A* is called A* Graph

Search, whereas the version given in the spec is called A* Tree Search.

Edit (4/1/2018): This answer made more verbose since a few students on Piazza seemed be trying this instead of the critical optimization.

The puzzles work fine on my computer, but not on the AG. I’m getting a GC overhead limit exceeded error, or just a message that the “The autograder failed to execute correctly.”

Your computer is probably more powerful than the autograder. Notably, the AG has much less memory. You should be able to complete puzzles 30 and 31 in less than a second, and they should also work if you use only 128 megabytes of memory. To run your code with only 128 megabytes, try running your code with the following commands. Unfortunately, there’s no easy way to do this in IntelliJ. For directions in IntelliJ see this link. Otherwise, you can try running your code from the command line.

java -Xmx128M hw4.puzzle.EightPuzzleSolver ./input/puzzle30.txt

java -Xmx128M hw4.puzzle.EightPuzzleSolver ./input/puzzle31.txt

java -Xmx128M hw4.puzzle.EightPuzzleSolver ./input/puzzle4x4-30.txt

If your code is taking longer, by far the most likely issue is that you are not implementing the first critical optimization properly. Another possiblity is that you are creating a hash table of every board ever seen, which may cause the AG computer to run out of memory.

It is not enough to simply look at your code for the optimization and declare that it is correct. Many students have indicated confidence in their optimization implementation, only to discover a subtle bug. Use print statements or the debugger to ensure that a board never enqueues the board it came from.

Situations that cover 98% of student performance bugs:

- Recall that there is a difference between

==andequals. - Recall also that the optimization is that you should not “enqueue a neighbor if its board is the same as the board of the previous search node”. Checking vs. the current board does nothing, since a board is never its own neighbor.

How do I ensure my Board class immutable?

The most common situation where a Board is not immutable is as follows:

- Step 1: Create a 2D array called cowmoo.

- Step 2: Pass cowmoo as an argument to the Board constructor.

- Step 3: Change one or more values of cowmoo.

If you just copy the reference in the Board constructor, someone can change the

state of your Board by changing the array. You should instead make a copy of the

2D array that is passed to your board constructor. Try running TestBoard to

ensure your board is immutable.

Why can’t Gradescope compile my files even though I can compile them locally?

Due to the nature of the autograder, you cannot use any public Board and Solver methods that were not mentioned in the spec. Consider moving the logic into one file.

The AG is reporting a bug involving access$ or some kind of null pointer exception. What’s going on?

It’s important that your moves and solutions methods work no matter the

order in which they are called, and no matter how many times they are called.

Failing the mutability test, or failing only moves but not solutions tests

are sure signs of this issue.

Credits

This assignment was inspired by a somewhat similar assignment created by Kevin Wayne and Bob Sedgewick at Princeton University.